Well, having decided to put together a small homelab and bought an HP MicroServer Gen8 for the purpose, I decided to go ahead with VMware ESXi as the hypervisor. Something new, and we all like a challenge, right?

Well, it's definitely been a challenge. In retrospect Hyper-V might have been easier, but anyway I'll get the process down while it's still fresh in my mind.

The setup is currently the bog-standard MicroServer: 4GB RAM, a 2.3GHz Celeron, a 32GB USB stick and 2 x 2TB SATA drives. Memory and processor upgrades are yet to come.

I downloaded the HP-specific version of ESXi 6.5 and mounted it via iLO. The install is pretty straightforward: click Next a few times, pick the USB stick as the install location, etc. Happy days, until you get the "you don't have over 4GB memory" (MEMORY_SIZE ERROR) message and it kicks you out of the installer…

I eventually worked out a way around it with the following instructions from noteis.net.

When the memory size error occurs, press ALT+F1 to drop to the command line, log in as root with a blank password, then run:

cd /usr/lib/vmware/weasel/util

rm upgrade_precheck.pyc

mv upgrade_precheck.py upgrade_precheck.py.old

cp upgrade_precheck.py.old upgrade_precheck.py

chmod 666 upgrade_precheck.py

vi upgrade_precheck.py (search for MEM_MIN_SIZE and find (4*1024), replace 4* with 2*, then save and close)

ps -c | grep weasel (find the Python PID)

kill -9 <Python PID>

Note that upgrade_precheck.pyc didn't exist on my install, possibly because it's the HP version, but I've left the step in there.

When you kill Python the installer restarts and you can get all the way through it. Lovely job.
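If you'd rather not edit the file in vi, the same change can be made with sed. This is a minimal sketch rather than the noteis.net method: `patch_mem_min` is a hypothetical helper name, it assumes the installer shell's busybox provides grep and sed, and it assumes the check appears in upgrade_precheck.py as the literal text (4*1024), as described above. Treat it as untested on the HP image and look at the file before relying on it.

```shell
#!/bin/sh
# Hypothetical helper: lower the installer's memory precheck from 4GB to 2GB.
# ASSUMPTION: upgrade_precheck.py contains the literal text "(4*1024)".
patch_mem_min() {
  file="$1"
  # Refuse to run if the expected text isn't there, rather than silently doing nothing.
  if ! grep -q '(4\*1024)' "$file"; then
    echo "pattern (4*1024) not found in $file" >&2
    return 1
  fi
  # Keep the untouched original as .old, like the mv step in the manual instructions.
  mv "$file" "$file.old" || return 1
  # Write a fresh, writable copy with (4*1024) rewritten as (2*1024).
  # In the regex, \* matches a literal asterisk; in the replacement, * is already literal.
  sed 's/(4\*1024)/(2*1024)/' "$file.old" > "$file"
}
```

From /usr/lib/vmware/weasel/util, after removing the .pyc, `patch_mem_min upgrade_precheck.py` stands in for the mv, cp, chmod and vi steps; you still find and kill the weasel Python process as before.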

With the hypervisor installed it was time to play. I started off with a Server 2016 install, and so the issues began.

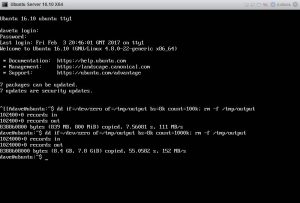

I wasn't expecting things to fly along on a Celeron with 4GB, but the install took over 3 hours with neither CPU nor memory anywhere near maxed out, so it looked to be a disk issue. In ESXi the max speed seen on the array was 30MB/s, and installing Ubuntu Server and testing there showed an average of 5MB/s. Not great.

The test in Ubuntu was:

dd if=/dev/zero of=/tmp/output bs=8k count=100k; rm -f /tmp/output

After heaps of research I managed to tie it down, in a nutshell, to crap RAID drivers.
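A note on the dd test: without a sync, dd can report the speed at which the guest's page cache accepts the data rather than the disk's. A variant using conv=fdatasync (a GNU dd option, so this assumes a Linux guest such as the Ubuntu one) makes dd flush the data to disk before it reports a figure. `write_test` is just a hypothetical wrapper name.

```shell
#!/bin/sh
# Rough sequential-write test for a Linux guest (assumes GNU dd, as on Ubuntu).
# conv=fdatasync flushes the file to disk before dd prints its MB/s figure,
# so the number reflects the virtual disk rather than the page cache.
write_test() {
  dir="${1:-/tmp}"      # directory on the disk under test
  count="${2:-100k}"    # number of 8KiB blocks; 100k blocks is 800MiB
  out="$dir/dd_write_test.$$"
  dd if=/dev/zero of="$out" bs=8k count="$count" conv=fdatasync
  status=$?
  rm -f "$out"          # always clean up the test file
  return $status
}
```

`write_test /tmp` prints dd's usual summary line, including the throughput, then deletes the test file.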

I gather current wisdom is to use the scsi-hpvsa-5.5.0-88 driver in ESXi 6 and 6.5.

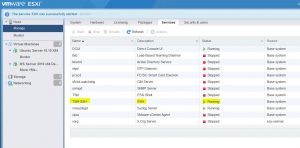

First you need to enable SSH in ESXi (Host > Manage > Services > Enable SSH).

Then use FileZilla (over SFTP) or another SFTP/SCP client to copy scsi-hpvsa-5.5.0-88OEM.550.0.0.1331820.x86_64.vib across to /tmp.

Stop all your VMs and enable maintenance mode, either via the web GUI or by SSHing to your hypervisor and running:

esxcli system maintenanceMode set --enable true

Then, in an SSH session on the hypervisor:

cd /tmp

cp scsi-hpvsa-5.5.0-88OEM.550.0.0.1331820.x86_64.vib /var/log/vmware/

# Remove the scsi-hpvsa driver

esxcli software vib remove -n scsi-hpvsa -f

# Install scsi-hpvsa-5.5.0-88

esxcli software vib install -v file:scsi-hpvsa-5.5.0-88OEM.550.0.0.1331820.x86_64.vib --force --no-sig-check --maintenance-mode

reboot
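The driver swap can be wrapped in a small script that stops at the first failed step instead of carrying on with a half-swapped driver. This is only a sketch: `swap_hpvsa` and the `ESXCLI` override are hypothetical, and it passes the VIB as an absolute path (which `esxcli software vib install -v` accepts) rather than copying it to /var/log/vmware/ first. It leaves the reboot to you.

```shell
#!/bin/sh
# Hypothetical helper wrapping the scsi-hpvsa driver swap above.
# ESXCLI defaults to the real esxcli; ESXCLI=echo gives a dry run that only prints commands.
swap_hpvsa() {
  vib="$1"
  cli="${ESXCLI:-esxcli}"
  case "$vib" in
    /*) ;;  # absolute path, as esxcli expects for a local VIB
    *) echo "give the VIB as an absolute path" >&2; return 1 ;;
  esac
  [ -f "$vib" ] || { echo "VIB not found: $vib" >&2; return 1; }
  # Only request maintenance mode if the host isn't already in it.
  if [ "$($cli system maintenanceMode get)" != "Enabled" ]; then
    $cli system maintenanceMode set --enable true || return 1
  fi
  # Fails (and stops the script) if scsi-hpvsa isn't installed.
  $cli software vib remove -n scsi-hpvsa -f || return 1
  $cli software vib install -v "$vib" --force --no-sig-check --maintenance-mode || return 1
  echo "scsi-hpvsa swapped; reboot the host to load the new driver"
}
```

`ESXCLI=echo swap_hpvsa /tmp/scsi-hpvsa-5.5.0-88OEM.550.0.0.1331820.x86_64.vib` just prints the esxcli commands it would run; drop the `ESXCLI=echo` to do it for real, then reboot.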

Rebooting and logging back into ESXi, I found my datastore gone along with both my VMs 🙁

Checking the RAID driver, I found it was now on the ahci driver (vmw_ahci), so in essence we need to disable that to force the host back onto our 5.5.0-88 driver.

Again, SSH into the hypervisor and run:

esxcli system module set --enabled=false --module=vmw_ahci

You can check it worked via:

esxcli system module list

You should end up with vmw_ahci shown as not enabled.
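Rather than reading through the whole module list, you can pull out just the vmw_ahci row. This assumes `esxcli system module list` prints Name, Is Loaded and Is Enabled columns in that order, so check it against your own host's output first; `ahci_status` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: report vmw_ahci's loaded/enabled state.
# ASSUMPTION: module list columns are Name, Is Loaded, Is Enabled.
# ESXCLI defaults to the real esxcli and can be overridden for testing.
ahci_status() {
  cli="${ESXCLI:-esxcli}"
  # Print the two state columns for vmw_ahci; exit non-zero if it isn't listed.
  $cli system module list | awk '
    $1 == "vmw_ahci" { print "loaded=" $2 " enabled=" $3; found = 1 }
    END { if (!found) { print "vmw_ahci not listed"; exit 1 } }'
}
```

After the `esxcli system module set` command and a reboot, you'd hope to see `enabled=false`.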

Reboot again and rerun the disk speed check in Ubuntu.

I'll take 150MB/s!!

So that's ESXi 6.5 up and running on a 4GB HP MicroServer. More challenging than I was expecting, but I have to say I'm liking ESXi so far.

Hi, I tried to follow these instructions. I use ESXi 6.5 and RAID 1. After the first restart it shows the message: "Two filesystems with the same UUID have been detected. Make sure you do not have two ESXi installations."

I found a solution https://kb.vmware.com/selfservice/microsites/search.do?language=en_US&cmd=displayKC&externalId=1035107.

After booting, the package is version hpvsa 5.5.0-88. Next I ran "esxcli system module set --enabled=false --module=vmw_ahci" and rebooted again. After rebooting it's version hpvsa 5.5.0.102 again :/

Can you help me with this?

Did you remove the 5.5.0.102 driver and install 5.5.0-88 as per the below??

cd /tmp

cp scsi-hpvsa-5.5.0-88OEM.550.0.0.1331820.x86_64.vib /var/log/vmware/

# Remove the scsi-hpvsa driver

esxcli software vib remove -n scsi-hpvsa -f

# Install scsi-hpvsa-5.5.0-88

esxcli software vib install -v file:scsi-hpvsa-5.5.0-88OEM.550.0.0.1331820.x86_64.vib --force --no-sig-check --maintenance-mode

reboot

Thinking about it, maybe you need to do that again? If you had duplicate filesystems, you may have removed the driver from one of them and then removed that device, hence needing to go through the same procedure again.

I tried it 5 times. Always after the 3rd restart (after setting module vmw_ahci to false) it's version 5.5.0.102 again.

I have the same problem as Jcob. Has anyone found a solution?

Yes, I removed the 5.5.0.102 driver and then installed the 5.5.0-88 driver according to the instructions. After restarting I couldn't see my VMs, so I set module vmw_ahci to false and restarted again. After that restart it's version 5.5.0.102 again.

Hey chaps,

I had completed these steps before finding this page, after continuing to have issues on 6.5 with the .88 driver. On previous releases this driver appeared to be stable, but now I have transfer rate issues. Initially I get a high SMB transfer rate to a virtual machine hosted on the RAID 1+0 array, but after a few seconds the transfer rate drops from 100-150MB/s to 9-10MB/s. I presume the fast part of the transfer is the MicroServer's memory filling up as it struggles to commit to disk, before falling back to the 10MB/s the disk array can handle.

Any ideas? I ran through the instructions here again and ensured the .88 driver is in place and being used.

Cheers!

Actually, ignore the last message 🙂

It seems I can sustain 100+MB/s to this array. The issue appears to be just with OpenMediaVault installed on ESXi; I can SMB transfer to other virtual machines fine. OMV maxes out its CPU waiting on disk I/O, but the host itself is fine.

Will investigate further.

OK. Well, the issue with OMV write performance was my own stupid fault. I'd thin provisioned the virtual NAS's disk, which would explain why write performance dropped as VMware struggled to expand the VMDK file on the fly.

You can remove all my comments now, as they are no longer relevant to your original post.

Thanks 😉

Quick note: I was able to use wget via SSH out of the box to obtain the VIB.